More information, see Specifying a query result Make sure that you have specified a valid S3 location for your query results. The S3 location provided to save your query results is invalid. Issue, check the data schema in the files and compare it with schema declared inĪWS Glue. Primitive type (for example, string) in AWS Glue. Non-primitive type (for example, array) has been declared as a This error is caused by a parquet schema mismatch. The AWS Glue crawler wasn't able to classify the data formatĬertain AWS Glue table definition properties are emptyĪthena doesn't support the data format of the files in Amazon S3ĭo I resolve the error "unable to create input format" in Athena? in theĪWS Knowledge Center or watch the Knowledge Center video. This error can be a result of issues like the following: HIVE_UNKNOWN_ERROR: Unable to create input format ToĪvoid this error, schedule jobs that overwrite or delete files at times when queriesĭo not run, or only write data to new files or partitions. Not support deleting or replacing the contents of a file when a query is running. It usually occurs when a file on Amazon S3 is replaced in-place (for example,Ī PUT is performed on a key where an object already exists). This message can occur when a file has changed between query planning and queryĮxecution. HIVE_FILESYSTEM_ERROR: Incorrect fileSize 1234567 This message indicates the file is either corrupted or empty. HIVE_CURSOR_ERROR: Unexpected end of input stream Modifying the files when the query is running. Rerun the query, or check your workflow to see if another job or process is This error usually occurs when a file is removed when a query is running. HIVE_CURSOR_ERROR: 3.model.AmazonS3Exception: The S3://awsdoc-example-bucket/: Slow down" error in Athena? 
in the AWS I resolve the "HIVE_CANNOT_OPEN_SPLIT: Error opening Hive split This error can occur when you query an Amazon S3 bucket prefix that has a large number HIVE_CANNOT_OPEN_SPLIT: Error opening Hive split Parsing field value '' for field x: For input string: """ in the For more information, see When I query CSV data in Athena, I get the error "HIVE_BAD_DATA: Error The column with the null values as string and then useĬAST to convert the field in a query, supplying a default

Null values are present in an integer field. See My Amazon Athena query fails with the error "HIVE_BAD_DATA: Error parsingįield value for field x: For input string: "12312845691"" in the Single field contains different types of data. The data type defined in the table doesn't match the source data, or a This error can occur in the following scenarios: To avoid this, place theįiles that you want to exclude in a different location. csv andįiles from the crawler, Athena queries both groups of files. For example, if you have anĪmazon S3 bucket that contains both. Patterns that you specify an AWS Glue crawler. Athena reads files that I excluded from the AWS Glue crawler More information, see Amazon S3 Glacier instant Glacier Instant Retrieval storage class instead, which is queryable by Athena. Restored objects back into Amazon S3 to change their storage class, or use the Amazon S3 To make the restored objects that you want to query readable by Athena, copy the

Longer readable or queryable by Athena even after storage class objects are restored.

Data that is moved or transitioned to one of these classes are no

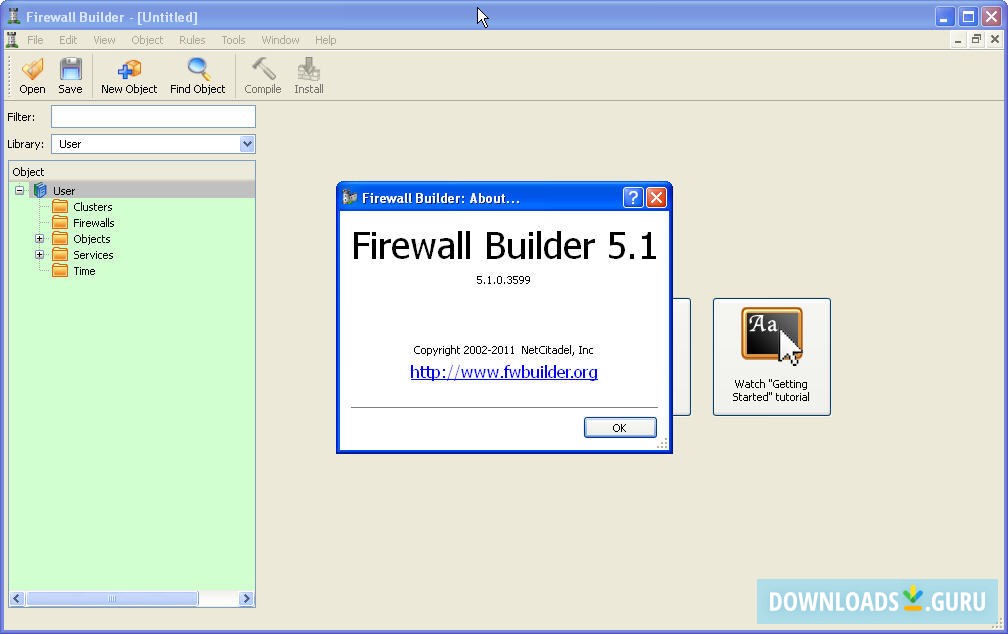

FIREWALL BUILDER CLASSIFY ACTION MISSING ARCHIVE

The S3 Glacier Flexible Retrieval and S3 Glacier Deep Archive storage classesĪre ignored. Retrieval or S3 Glacier Deep Archive storage classes. Athena cannot read files stored in Amazon S3 GlacierĪthena does not support querying the data in the S3 Glacier flexible To work around this limitation, rename the files. See Using CTAS and INSERT INTO to work around the 100Īthena treats sources files that start with an underscore (_) or a dot (.) as Statements that create or insert up to 100 partitions each.

FIREWALL BUILDER CLASSIFY ACTION MISSING SERIES

Limitation, you can use a CTAS statement and a series of INSERT INTO May receive the error HIVE_TOO_MANY_OPEN_PARTITIONS: Exceeded limit ofġ00 open writers for partitions/buckets. When you use a CTAS statement to create a table with more than 100 partitions, you INSERT INTO statement fails, orphaned data can be left in the data location Make sure that there is noĭuplicate CTAS statement for the same location at the same time. SELECT (CTAS) Duplicated data occurs with concurrent CTAS statementsĪthena does not maintain concurrent validation for CTAS.
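The HIVE_TOO_MANY_OPEN_PARTITIONS workaround (one CTAS statement followed by INSERT INTO statements of up to 100 partitions each) can be sketched as a small statement generator. This is only an illustration under stated assumptions: the function `build_statements`, the table names, the bucket, and the partition column `dt` are hypothetical, and `SELECT *` assumes the partition column is already the last column of the source table.

```python
from typing import Iterator, List, Sequence

# Limit named in the HIVE_TOO_MANY_OPEN_PARTITIONS error message.
MAX_PARTITIONS_PER_STATEMENT = 100


def chunked(values: Sequence[str], size: int) -> Iterator[List[str]]:
    """Yield consecutive slices of at most `size` items."""
    for start in range(0, len(values), size):
        yield list(values[start:start + size])


def quote(value: str) -> str:
    """Render a SQL string literal, doubling embedded single quotes."""
    return "'" + value.replace("'", "''") + "'"


def build_statements(
    source_table: str,
    target_table: str,
    external_location: str,
    partition_column: str,
    partition_values: Sequence[str],
) -> List[str]:
    """Return one CTAS statement plus INSERT INTO statements, 100 partitions each."""
    statements: List[str] = []
    batches = chunked(partition_values, MAX_PARTITIONS_PER_STATEMENT)
    for index, batch in enumerate(batches):
        in_list = ", ".join(quote(v) for v in batch)
        # Assumption: the partition column is the last column of the source table.
        select = (
            f"SELECT * FROM {source_table} "
            f"WHERE {partition_column} IN ({in_list})"
        )
        if index == 0:
            # The first batch creates the table.
            statements.append(
                f"CREATE TABLE {target_table} WITH ("
                f"external_location = {quote(external_location)}, "
                f"partitioned_by = ARRAY[{quote(partition_column)}]) AS {select}"
            )
        else:
            # Later batches append further partitions to the existing table.
            statements.append(f"INSERT INTO {target_table} {select}")
    return statements
```

For 250 partition values this produces three statements: a CTAS covering the first 100 values and two INSERT INTO statements covering the remaining 100 and 50. Run them one after another rather than concurrently.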

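For the HIVE_BAD_DATA case where null values are present in an integer field, the workaround of declaring the column as string and converting it with CAST might look like the sketch below. The helper names, the table `orders`, and the column `quantity` are hypothetical. Wrapping the CAST in NULLIF and COALESCE is one possible way to supply the default, chosen here so that empty strings are treated like nulls. It is not taken from the original text.

```python
def int_with_default(column: str, default: int = 0) -> str:
    """Build a SQL expression that reads a string column as an integer.

    NULLIF turns empty strings into NULL, CAST converts the remaining
    values, and COALESCE substitutes the default for every NULL.
    """
    return f"COALESCE(CAST(NULLIF({column}, '') AS integer), {default})"


def build_select(table: str, column: str, default: int = 0) -> str:
    """Build a query that exposes the converted column under a new alias."""
    expression = int_with_default(column, default)
    return f"SELECT {expression} AS {column}_as_int FROM {table}"


# Hypothetical table and column names, for illustration only.
query = build_select("orders", "quantity")
print(query)
```

Values that are neither empty nor valid integers would still make the CAST fail, so this sketch only addresses the null and empty-string case described in the entry.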
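For the entry on files that start with an underscore or a dot, a rename plan can be computed from a list of object keys before any objects are touched. The sketch below is a minimal illustration: `plan_renames` and the `data-` prefix are hypothetical, and only the file name (the last path component) is checked, which is an assumption about how the rule applies.

```python
import posixpath
from typing import Dict, Iterable


def is_hidden(key: str) -> bool:
    """Return True if the file name part of the key starts with '_' or '.'."""
    name = posixpath.basename(key)
    return name.startswith(("_", "."))


def plan_renames(keys: Iterable[str], prefix: str = "data-") -> Dict[str, str]:
    """Map each hidden key to a proposed visible key in the same folder."""
    plan: Dict[str, str] = {}
    for key in keys:
        if is_hidden(key):
            directory, name = posixpath.split(key)
            # Strip the leading underscores/dots and add a neutral prefix.
            new_name = prefix + name.lstrip("_.")
            plan[key] = posixpath.join(directory, new_name)
    return plan


# Hypothetical keys, for illustration only.
plan = plan_renames(["logs/_part-0001.csv", "logs/part-0002.csv", ".hidden.json"])
print(plan)
```

Amazon S3 has no rename operation, so applying such a plan means copying each object to its new key and then deleting the original.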